As artificial intelligence (AI) becomes more prevalent in everyday life, questions persist about what rules are needed to govern it and who’s responsible for making them. Baobao Zhang—Maxwell Dean Associate Professor of the Politics of AI at Syracuse University’s Maxwell School of Citizenship and Public Affairs—believes public involvement in the policy process is crucial and advocates for educating the citizenry and developing AI literacy to spark informed debates about what action the government should take.

“This is a policy challenge that’s continually evolving, which makes it super challenging,” she says. “Oftentimes, we talk about ‘How do we future-proof policy?’ and it’s quite difficult. But that doesn’t mean we shouldn’t anticipate what could be the potential consequences of AI.”

Regulating fast-moving technology exposes a tension between tech companies pushing the envelope of innovation and those trying to keep the public safe. “I’m concerned with the unknowns where large numbers of people have access to this technology, and it’s really shaping their beliefs about what’s real and what’s not in the world, or what’s healthy or not healthy,” Zhang says. “It’s a big experiment that we’re all part of.”

Zhang, who teaches and researches AI ethics and governance and is co-editor of The Oxford Handbook of AI Governance (Oxford University Press, 2024), gathers insights on the public’s perception of AI, evaluates the technology’s opportunities and risks, and examines how the government should regulate it. She’s investigated the ethics of large language models, which have produced unsafe recommendations in response to prompts. She sees deliberative democracy (informed public discussion designed to shape political decisions) as key to good policy.

“We have all these myths about what AI is and what it can do,” she says. “There’s a lot of hype and then there’s reality, and it’s important to separate the hype from the reality. It’s a question of how we scale public education to increase AI literacy so that people can have informed debates about what the government should or should not do.”

Educating Citizens on AI

Maxwell School professor Baobao Zhang wants her students to understand the benefits and consequences of artificial intelligence and the challenges of creating policies to regulate it.

In 2022, as one of 15 scholars nationwide named an AI2050 Early Career Fellow by the Schmidt Sciences philanthropic organization, Zhang helped organize the first U.S. National Public Assembly on AI Governance. An expert in research methodology, she worked with a team to select 40 participants, representative of the U.S. public’s diverse demographics, from among 2,000 survey respondents. Over 40 hours of Zoom meetings, the participants heard from computer scientists, policy analysts and other experts who explained how AI works technically and what policy challenges it raises. After discussion and debate, the participants put forward recommendations for AI policy.

Among the findings, Zhang says, was that general AI systems, which include generative AI platforms, present a greater risk than specific AI systems designed to perform limited tasks. Because general systems can do so many things, their potentially harmful outcomes are difficult to predict. Generative AI, for example, can provide erroneous medical advice or create deepfakes to manipulate people. AI avatars can be used to mislead users who believe they are real, and AI chatbot companions can unduly influence people who form relationships with them.

“People were obviously concerned about some of the consequences of AI we’ve seen—for instance, young people committing suicide or self-harming because they’re over-relying on chatbots as therapists,” she says. “There are all these cases where people are using AI for what it’s not intended to be used for, because there are no guardrails.”

Zhang also notes that participants’ responses and concerns crossed political boundaries. “When you drill down to the nitty-gritty,” she says, “a lot of people agree that we should be taking these risks seriously.”

Maxwell colleague Johannes Himmelreich, an associate professor of public administration and international affairs who studies philosophical and ethical issues associated with AI, sees Zhang’s commitment to deliberative democracy as a significant asset in helping shape AI regulations and policy. “Baobao’s forums give citizens both an opportunity to better understand how to use AI safely and a voice in governance—two things traditional democracy struggles to provide for fast-moving technology,” he says.

AI in the Workplace

With the support of a National Science Foundation CAREER grant, Zhang is studying the impact of AI in the workplace.

This spring, Zhang was awarded a prestigious five-year National Science Foundation CAREER grant to investigate both the experiences of workers impacted by generative AI and future workers’ attitudes toward the technology. She plans to survey 1,500 workers and then conduct in-depth interviews and workshops with 40 workers to examine their perceptions of generative AI.

She’ll explore their views on automation, job displacement, diminishing economic opportunities, AI workplace surveillance and other concerns, as well as on work quality and positive experiences such as improved productivity. “One thing we theorize is that how you feel about generative AI in the workplace probably depends on your power in the workplace,” Zhang says. “If you have a lot of autonomy and leadership, you might feel less threatened than someone who’s starting out and is in a more precarious position.”

In addition, she’ll survey high school and college students and host workshops to gauge their attitudes about future employment and how the AI era may influence their decisions about further schooling, training and careers.

Giving Citizens a Voice

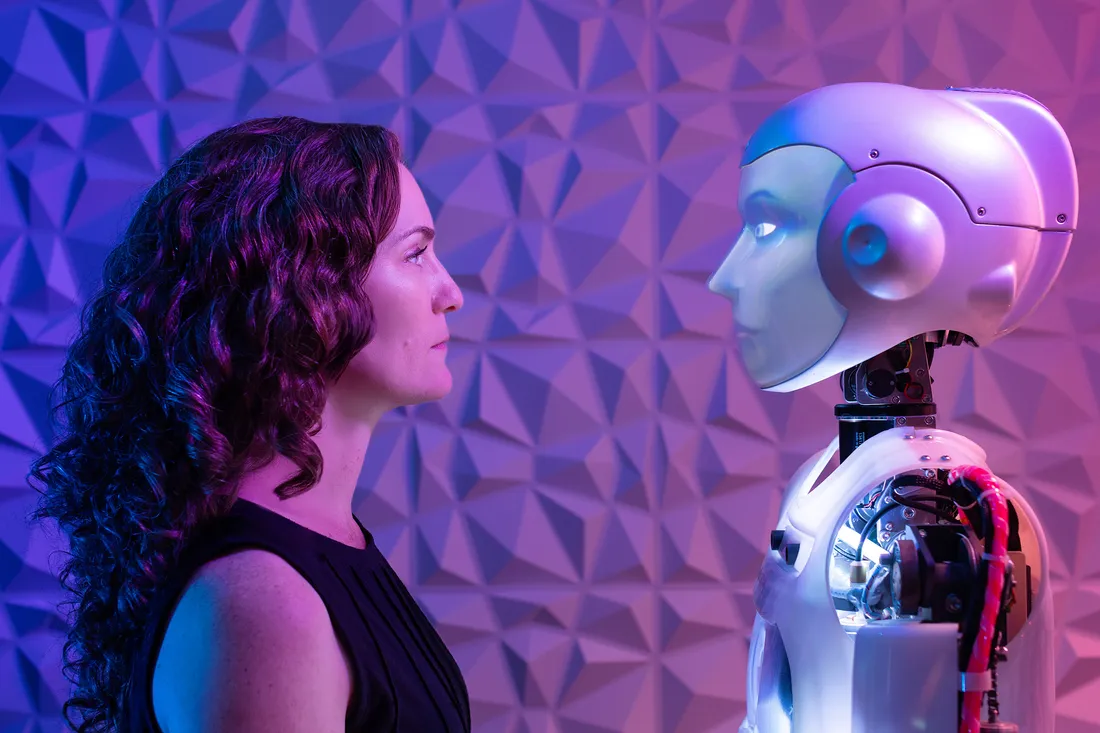

One of Zhang’s concerns is how generative AI can be used to manipulate or deceive others, including creating fake people and personalities through AI avatars.

School of Information Studies doctoral student Roman Saladino ’24 developed an interest in AI and politics as an undergraduate political science major and reached out to Zhang, who encouraged that interest, mentored him and gave him opportunities, including work as a research assistant in summer 2024. “I primarily worked on hand-coding news articles pertaining to the future of work and AI. It was a great experience being able to learn fundamental parts of the research process,” he says. “What is really special about Dr. Zhang as a person, researcher and teacher is her care and dedication to her field and others that want to join.”

Saladino is especially excited about her work on AI democratization at a time when the public has little input into the direction in which the government and tech companies may take the technology. “Dr. Zhang has done a really cool thing by researching how we can democratize AI, in which the consumer has a say in how these products are being developed, utilized and regulated,” he says.

Along with an informed public, Zhang believes human monitoring is essential to keeping the technology safely under control. One example is employing AI auditors, a role she says could evolve into an industry of its own. “What’s concerning to me is that we are now moving away from just using generative AI as a tool that still requires a lot of human input to the idea of AI agents, which can act more autonomously with more high-level abstract directions,” she says. “In theory, that sounds good, but in principle, because there’s not as much human control, it can potentially lead to disastrous consequences.”